Prelude / Background

Born: Estimated in 2015

Adopted: 2017-12-20

Died: 2024-10-02

Patton was diagnosed with hypertrophic cardiomyopathy on 2022-11-28, a disease in which the heart muscle thickens. This led to heart failure, meaning that his heart could not pump enough blood to the rest of his body, and it also caused secondary problems such as blood clots and fluid building up in and around his lungs. For 22 months, we were able to manage Patton’s condition with medication given three times per day and with frequent visits to the cardiologist. Unfortunately, hypertrophic cardiomyopathy is a progressive disease with no cure.

Eulogy

Patton, you were an amazing cat. You filled every room with such love and joy. I remember the first time that I met you. I was looking to adopt a cat who would want to go outside rather than be stuck living in a small apartment. I remember walking through several rooms at Peter Zippi and meeting many wonderful, playful cats. You were in the last room that I visited, sitting on top of a cat climber and basking in the sunlight. You were recovering from surgery in which all of your teeth had been extracted because of stomatitis. I had been told that you had spent the previous six months in the city pound, held as evidence in a court case. During that time, you had been kept in a small cage and denied proper medical care. Despite all of this, when I came into the room, you came alive. You perked up and wanted to be petted, and after a few moments, you stood up and walked over to the door. I could tell that you were ready to leave. I could tell that you wanted a better life and that you were tired of being trapped indoors.

We did not adopt you that day because you were still recovering from your surgery, but I came back to visit you a second time and then adopted you on my third visit.

I don’t think you knew what you were getting yourself into at the time, but I hope that you were ready for the adventure. I remember that on the car ride home, you sat in my lap and looked out the window. From the moment I met you, you were a curious cat who liked seeing the world around you, and you never liked staying in your cat carrier.

When we got home that first night, you walked around my bedroom smelling the different things and ate some food. Eventually, you decided to hide under my desk. You were in a new environment with people you did not know well, and I could understand why you were scared. However, once I went to sleep and turned off the lights, you jumped onto the bed and put your butt in my face to cement the relationship in the standard cat way. You then slept in the bed all night, and we have been inseparable ever since.

Within a few weeks of adopting you in southern California, we flew back to Maryland. I was entirely unprepared for your first flight. I had you in a standard cat carrier and planned to keep you under the seat during the flight, as is typically done. However, shortly after takeoff, you started panicking and thrashing around to get out of the carrier. I had to hold you in my lap and rock you back and forth for the entire 6-hour flight to try to keep you calm.

Once the airplane landed, you calmed down. In the taxi from the airport, you looked out the window curiously the entire way. Again, once we arrived at my apartment, you settled in right away. You were always impressively adaptable to new environments; this was the second new place you had been introduced to within a few weeks of your adoption.

Within the first month of adopting you, I started training you to walk on a leash. You never liked the harness, but you did like going outside. Amazingly, within a few weeks we were able to start taking basic walks around the building. I think that you enjoyed being able to smell the plants and scratch the trees, as a normal cat does.

I even dragged you outside the apartment so that you could experience snow for the first time. I think you thought I was crazy for taking you out in the cold, and you wanted to go back inside to the warm apartment.

When we weren’t out walking, you spent your days watching the birds at the bird feeder or relaxing on the bed or your cat climber.

After several months of being in Maryland together, we returned to California for a week-long vacation. This, of course, meant that I took you on an airplane for the second time. However, this time, I was much better prepared. I had registered you so that you could sit on my lap during the flight and not have to stay in the cat carrier. While you panicked when the airplane took off, you calmed down within the first 10 minutes and spent most of the flight looking out the window and enjoying being petted.

Flying was never enjoyable for you, but you preferred coming along on vacations to being left behind. In total, you took around a dozen flights, each around 6 hours long, back and forth across the country. Flight attendants often complimented you on how well-behaved you were.

Traveling with me meant that you got to experience lots of different places. Often, we would simply walk the local trails.

I think one of your favorite places that we traveled to was Washington State. There, we spent hours each day walking through the forests. You would walk up to 4 miles per day at a pace of about 1 mile per hour.

When you got tired, you would meow and ask to be carried in the cat carrier backpack that I had. You would rest in the backpack while still looking out at the trees.

You even hiked several miles up a mountain to the top of Poo Poo Point and got to look out at the sunset.

The true winners of the COVID lockdowns were pets, and you were undoubtedly one of them. During the lockdowns, I spent all of my time with you. During the day, I would take you and my laptop to the park. You would enjoy climbing the trees and chasing squirrels. A few times, I lost track of you for several minutes while you were hiding in the bushes. When you were tired or it was too hot, you would come and sit beside me in the shade.

After nearly a year of just the two of us, I decided to fly back to California so that we could be around family, as the COVID lockdowns seemed to continue without end. I tried to prepare us for the airport as best I could. I tried to modify a mask so that you could wear it on the airplane, but I never found anything that you were willing to tolerate.

Once back in California, we ended up staying for several months as the COVID lockdowns continued. There, you got to run around in the backyard on your own every day.

You became friends with Blacky, who, up until that point, had been a feral cat who rarely interacted with humans. I think that you taught Blacky how to be a domesticated cat and how to enjoy hanging out around humans. I think that Blacky taught you how to catch lizards and other small animals in the backyard. Because of your influence on Blacky, Blacky is now a fully domesticated cat who spends his nights sleeping and relaxing indoors.

Because you didn’t have any teeth, you rarely killed the lizards or birds that you caught. Often, you would bring the lizards into the house and let them loose. I suspect that you caught and released a few of the same lizards multiple times as a result.

After several months in California, the COVID restrictions started to ease, and I decided that we should go back to Maryland. I am sure that you missed playing in the backyard and spending your days with Blacky.

Thankfully, you didn’t have to wait too long before your family grew again. Within a few weeks of being back, you met Jojo.

That first week, there was a lot of growling, as you were a bit stunned to have another cat in your space.

However, you eventually became friends with Jojo.

We started leaving the patio door open all the time. During the day, you two would always stay out on the patio, watching the birds and enjoying the sun.

When it was cold or rainy, you would complain and ask for a nice warm day.

For a few months, you were also friends with Professor Meatball.

However, Professor Meatball was already a very old dog by the time you met her, so she was not in your life for long. After Meatball passed, your family changed again when we adopted a new puppy, Lollipop. This shifted the family dynamic once more, with a rambunctious puppy chasing you and Jojo around all the time. I have the following photo from the day we adopted Lollipop, showing you and Jojo looking at each other, wondering what this new puppy was doing in your home.

As always, you welcomed Lollipop into your family, and you, Jojo, and Lollipop spent your days hanging out together. Lollipop even picked up some cat-like behaviors from you: she learned to tap others on the head with her paw, and she learned to lick herself clean like a cat.

You were also the only one of our pets who was calm enough that I was able to take a picture with you and Gerbil the Hamster.

You were always loving, the friendliest cat, and devoted to your family, but you also had health complications throughout your life. When I first adopted you, you had recently had all of your teeth extracted because of stomatitis, which had left your mouth inflamed. The extractions reduced the inflammation, but we still had to be careful with your mouth. For a while, we went to the vet as often as once a month for laser therapy to reduce the inflammation in your mouth.

For several years, you had no active health complications and were a healthy cat. However, your good health didn’t last. On 2022-11-28, you were diagnosed with hypertrophic cardiomyopathy and heart failure. I had noticed that you seemed less energetic than your usual self and were isolating yourself a lot more. Usually, when you were uncomfortable, it was because your mouth was inflamed. However, this time, your mouth did not appear inflamed at all. We had a blood test done to see whether it would reveal anything informative. The results took a few days to come back, and they showed that your proBNP was off the charts at 1500, indicating that your heart was significantly stressed. We got an appointment with the cardiologist a week later. They found small clots in your bloodstream and that your heart’s lower chamber was nearly twice as large as it should have been. I was told that you would be lucky to live six months, given your condition at the time. You managed to live for 22 months.

I gave you your medication three times per day, as it seemed to help keep you stable and breathing comfortably throughout the day. You even learned when it was time for your medication and would come to remind me.

We went to the cardiologist every three months. Every time I took you to the cardiologist and we adjusted your medications, it was like you got a new lease on life. We no longer took long walks, but when you were feeling well, you would ask to go outside. We would walk around the block together, and you would enjoy smelling the plants. I would also take you to the park, where you would enjoy running around in the bushes for a few minutes before getting tired and sitting down somewhere to rest.

In the end, your health could not be maintained forever. In the few days before you died, you started having a harder time breathing. One complication of hypertrophic cardiomyopathy is fluid building up in and around the lungs. We tried to control the fluid buildup with medication as best we could, but it was a balancing act between what your lungs needed to clear the fluid and what your kidneys were able to tolerate. When the fluid buildup becomes too great, the remaining option is to drain the fluid from around the lungs with a needle and syringe, in a procedure called thoracocentesis. I had wanted to avoid the procedure for as long as possible because it comes with its own complications, such as requiring anesthesia, and it might need to be repeated as often as every two weeks.

Unfortunately, the time eventually came when we could no longer manage the fluid buildup with medication alone. On the morning of October 2nd, 2024, I took you to the pet emergency room to have the fluid drained from around your lungs. The day before, you had seemed very uncomfortable and were having difficulty breathing. I was up most of the night watching you sleep. Your breathing was very heavy.

On the way to the pet emergency room, you rode in the carrier as you always did, looking out the window as the trees went by. I hoped that this would be just like the earlier vet visits, after which you always felt better. I think that you were similarly hopeful, since that is what usually happened.

When we got to the pet emergency room, we were seen immediately. Your blood oxygen levels were fairly low. I got to hold the oxygen tube up to your nose for several minutes, and you enjoyed that. Even while hooked up to the various sensors, you were still a happy cat. You wanted to be petted, and I could tell that you trusted me to take care of you.

You died shortly after the procedure at 11:50 am on October 2nd, 2024.

You lived as long as you could, and you enjoyed life right up to the last moment. You kept going outside and enjoying the outdoors, playing with and loving your family. I think that you left right as keeping you alive started to become painful.

Here is the last photo that I took of you when you were alive on the afternoon of October 1st, 2024.

Miscellaneous

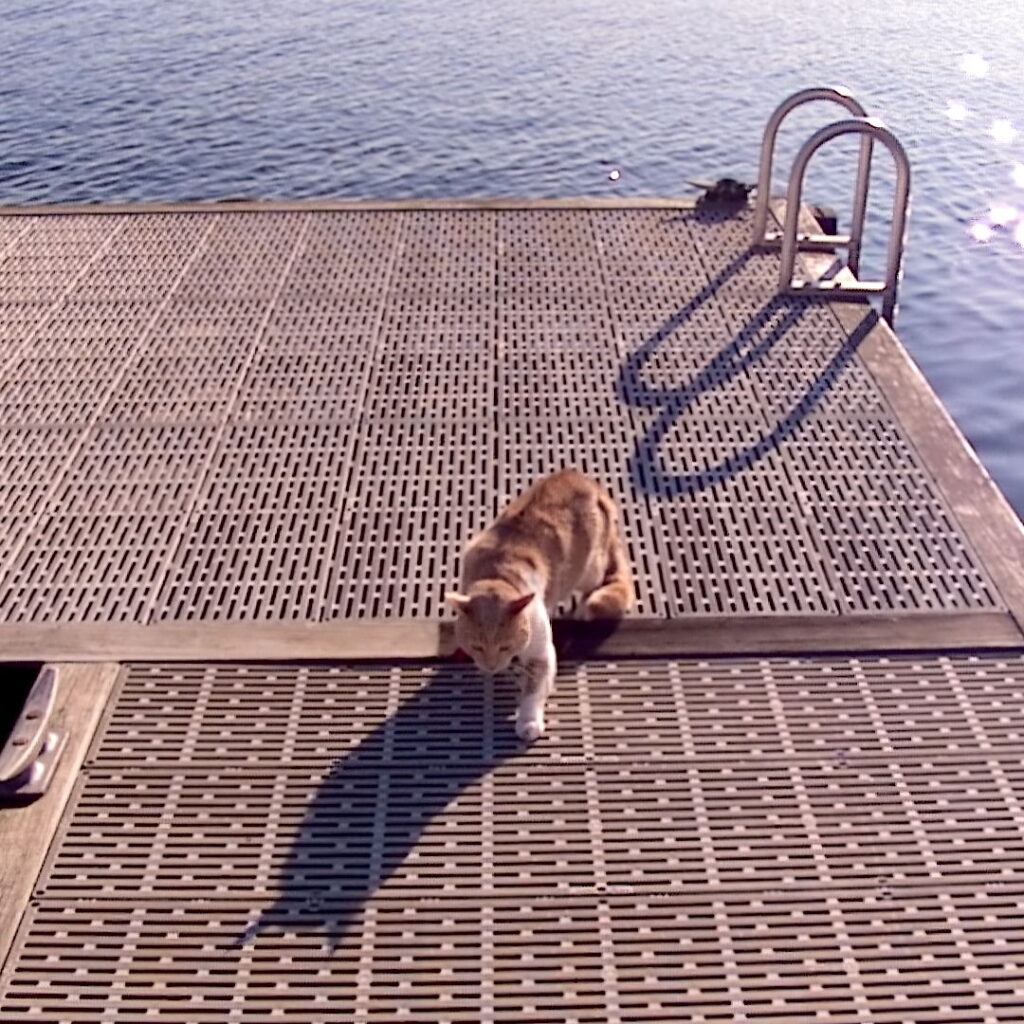

Patton at a campfire near the water in Washington State.

Patton on the dock in Washington State.

Going down a slide at a park with Patton. Patton was a very confused cat that day.

Patton, you always loved to play fetch with toy mice, and the toys were always scattered around the apartment. Unfortunately, Lollipop started destroying them, and we could no longer leave them out.

Goodbye Patton, I love you.