I have set up a mailing list at https://groups.google.com/group/jsapp-us. This will serve as a support center and a basis for development.

Large update JsApp.US

Today I pushed out a large number of updates for JsApp.us.

First, there are a few new features that everyone should know about (the first two should sound like what AppJet offered before it closed):

- People can make libraries public so that other people using the service can import them. To make a file public, see the files command and click the public link.

- There is a new profile view, a convenient place with links to all of a user's deployed applications as well as all of their public files, so that others can shop around for the modules they are looking for. (Self promotion: http://jsapp.us/p/matthewfl)

- There is also a share command that few people have discovered. It allows one to share the current version of a file, which is very useful for things such as writing blog entries about node.js or getting help with something that is not working.

- And finally, JsApp.us is now open source on GitHub, so if you find a bug that you want fixed, or want to build the next cool feature, fork it.

JSApp.US

It seems a little strange that I have not yet written a blog entry on my own project, JSApp.US.

For those who do not know what it is, it is a platform for quickly writing applications in JavaScript on node.js and then quickly deploying them to the web. To some extent this is my attempt to relive the days of AppJet, but with a new and more powerful platform.

As it stands now, it is my most popular site ever. It landed itself on the reddit/programming page and was at the top of Hacker News for about 3 hours. It seems to be very successful for now, and even I have a few applications that are using it, such as count.jsapp.us, which powers the little visitor count that I have placed in the sidebar.

There are a few new features that I am still planning to implement. The first is going to be a sort of profile that will allow people to “show off” the applications that they have on JSApp.US. I also plan to make it possible to require files from other users. This should end up like how GitHub handles users; the basic form will look like: require('other_user/file.name').

I married a widow (let’s call her Wilma)…

I married a widow (let’s call her Wilma) who has a grown-up daughter (call her Dolly). My father (Frank), who visited us often, fell in love with my stepdaughter and married her. Hence my father became my son-in-law and my stepdaughter became my mother. Some months later my wife gave birth to a son (Sammy), who became the brother-in-law of my father, as well as my uncle. The wife of my father, that is, my stepdaughter, also had a son (Steven).

Dropbox as back-end for git

I have been using Dropbox for a good amount of time now, and something that I have recently started using it for is as a back-end for private git repositories. GitHub is great for public repos, but if you just want to keep a private project synced between a few computers, or with a friend who is also on Dropbox, the steps are more or less straightforward.

1) Open up a terminal and cd into your Dropbox folder.

2) You have to make a bare git repository to hold all of the git objects. This can be done by issuing the “git init --bare repo.git” command, which will make a new directory called repo.git with all the supporting files.

3) At this point all that is left to do is link your working git directory with the Dropbox one. The easiest way to do this is to cd into the repo.git directory and issue the ‘pwd’ command, which will give you the absolute path (a file:// URL needs an absolute path). Then copy the output from pwd, cd into your working directory, and issue the command “git remote add origin file://[Paste in whatever came from pwd]”.

4) Now that your working directory is linked with the directory on Dropbox, issue the command “git push origin master” to load all your files into Dropbox. One thing that you will notice is that this runs a lot faster than when working with a remote repo over the internet. The reason is that you are not pushing files onto the internet but into another folder on your computer. If you look at your Dropbox icon at this point, you will see that it is syncing a number of files. If you have built up a large number of changes between syncs, you might see it syncing in excess of 200 files with the server.

5) To load the project onto another computer you can use “git clone file://[path to the dropbox repo.git folder]”. One thing to note before pulling any changes is to make sure that Dropbox has finished syncing all of the files. If you try to pull or clone out of the Dropbox directory before it is done syncing, you can run into problems, as git will look for files that are not there yet. So, as a note of caution, wait for Dropbox to finish syncing before cloning or pulling.
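The steps above can be sketched as one script. This is only a rough sketch under a few assumptions: a temporary directory stands in for the real Dropbox folder, the file name and commit identity are made up for illustration, and init.defaultBranch is set only so that the branch is called master, matching the push command above. The temporary directories are left behind so the result can be inspected.

```shell
#!/bin/sh
# Sketch of steps 1-5, with a temporary directory in place of a real Dropbox folder.
set -eu

# Assumption: DROPBOX stands in for your actual Dropbox folder.
DROPBOX="$(mktemp -d)"
WORK="$(mktemp -d)/project"
CLONE="$(mktemp -d)/project-clone"

# Steps 1-2: create the bare repository inside the (stand-in) Dropbox folder.
cd "$DROPBOX"
git -c init.defaultBranch=master init --bare -q repo.git

# A throwaway working repository with one commit (hypothetical file and identity).
git -c init.defaultBranch=master init -q "$WORK"
cd "$WORK"
echo "hello" > hello.txt
git add hello.txt
git -c user.name="Example" -c user.email="example@example.com" commit -q -m "first commit"

# Step 3: point origin at the absolute path of the bare repository.
git remote add origin "file://$DROPBOX/repo.git"

# Step 4: push into the Dropbox folder.
git push -q origin master

# Step 5: clone from the Dropbox folder, as a second computer would once syncing is done.
git clone -q "file://$DROPBOX/repo.git" "$CLONE"
```

On a real setup, DROPBOX would be the path of your own Dropbox folder and step 5 would run on the other computer after Dropbox reports that it has finished syncing.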

When you want to share a project with someone else, all you have to do is share the repo.git folder with that person. They can then clone that folder to make their own working directory. While this is an easy way to get 2 GB of free private git syncing space, there is no cool GitHub-like web interface for looking at code. As another note, anyone who can modify the repo.git folder is effectively an ‘admin’ of the project, as all the server really does is sync files between computers.

June 12, 2nd day of SAS

Again on the Semester at Sea there has already been a great deal going on. This time I did get up in time for breakfast.

After breakfast I went to the lecture by Sandra Day O’Connor about the history of the high court. I then had the joy of being able to eat lunch with Sandra Day O’Connor.

June 11, 2nd day of summer break

This is the second day of summer break, and I am spending at least the first part of it on the Semester at Sea for the 4 days of their “Global engagement forum.”

This has been the first day with any real activities. My day started with getting up really late and missing breakfast. Then I went and listened to Julian Bond talk for about 90 minutes about the past. After all of this I found myself at lunch. Right after lunch I was in somewhat of a private interview with Julian Bond, finding out even more of his personal views. That quickly led to the second lecture, about the sustainability of society, with three experts, followed by a 45-minute Q&A panel. I must admit that I was falling asleep during this event, as I am still adjusting to the 3-hour difference from California time. After coming out of a nap I went to dinner and then to a viewing of Beyond the Call, followed by a Q&A with basically everyone involved in the movie.

What a day.

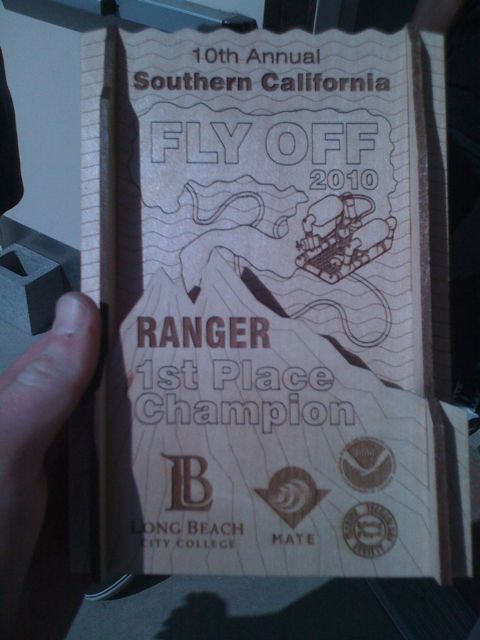

Rov regional

Today was the ROV regional. I was amazed that we were able to get first place. (Good job, everyone.) We have a lot more work to get through before the international competition in Hawaii.

We want to rebuild the whole robot before we go. Hopefully this next time we can have a better control system to show off. I am really hoping to get the victors working so that we have fine motor control, but I am not sure how well running the motors at half power on the ROV will work, as the system seemed to lack a good deal of responsiveness when working with the switches.

Feeling a little better about rov

I am now (11 pm) leaving Laurn's house as we are done for the night with the ROV. I feel a little better about the state of the ROV, as we were able to drive it around today. This was the first time that we have tested in a pool with the control system, and not a moment too soon with the robotics competition this Saturday.

We are still going to need to spend a lot more time working on it.

I think one thing to remember is that we blew a 20 amp fuse at 12 volts and used aluminum foil to be able to run the system, but it worked.

The state of ROV to come

This coming weekend is the ROV competition. I do not feel that our team is remotely ready; we could use another weekend. We have not even tested, and the control system is not 100%. The victors (the one part for the control system that I am trying to get from robotics) are not working. I have 5 and I am sure that 2 are broken. I am not sure about the other 3, but we need all 5 to run the system, and that is without any backup. So if anything breaks we will be screwed.

We are also the first team to go in the day, which means that there will be no time at the competition if worst comes to worst and we need to make a change to the system.

I have been really feeling the stress from ROV myself, as I am the only one working on the control system and the problems with the victors have been a real hair puller. Along with lacking a control system, I am also somewhat having to deal with cameras getting put onto the system. Then, to top it off, two of the people who do a good deal of the work on the physical ROV will not be able to go. So if anything were to break, we would end up in a real mess.

Vex world 2010

This last weekend was the VEX Robotics World Championship. Our school sent 3 of our best teams. I am happy to say that my team (687D) was our school's best. We were really thinking that we would get into the finals, as we were talking a decent amount with the top eight teams.

I think that our two other teams (687B & 687K) are fired up for the next year of robotics.

We will be back. We will choose from the top eight. And we will win.

FRC 2010 San Diego

This last weekend we were down in San Diego as a team. Overall we did great. Our admin team won an award. Also our robot got 3rd place in the qualifiers.

In the semifinals we were joined with the 2nd place team but were beaten by the 3rd alliance, who ended up winning the tournament.

FRC 2010 opening

Yesterday was the opening of the FRC 2010 robotics competition. The game this year is centered around soccer, mainly so that people who have not studied the game (parents and people who just want to watch) are able to follow it.

I think that one problem with this game is that the rules are a little too limiting, so there are really only one or two types of robots that are going to work for this competition, and every team will have some variation on a theme.

Why we love America

Awards’!

For those unfamiliar with these awards, they are named after 81-year-old Stella Liebeck who spilled hot coffee on herself and successfully sued the McDonald’s in New Mexico where she purchased the coffee. You remember, she took the lid off the coffee and put it between her knees while she was driving.

Here are the Stella’s for the past year:

7TH PLACE:

Kathleen Robertson of Austin, Texas was awarded $80,000 by a jury of her peers after breaking her ankle tripping over a toddler who was running inside a furniture store. The store owners were understandably surprised by the verdict, considering the running toddler was her own son.

6TH PLACE:

Carl Truman, 19, of Los Angeles, California won $74,000 plus medical expenses when his neighbor ran over his hand with a Honda Accord. Truman apparently didn’t notice there was someone at the wheel of the car when he was trying to steal his neighbor’s hubcaps.

Go ahead, grab your head scratcher.

5TH PLACE:

Terrence Dickson, of Bristol, Pennsylvania, was leaving a house he had just burglarized by way of the garage. Unfortunately for Dickson, the automatic garage door opener malfunctioned and he could not get the garage door to open. Worse, he couldn’t re-enter the house because the door connecting the garage to the house locked when Dickson pulled it shut. Forced to sit for eight, count ’em, EIGHT, days on a case of Pepsi and a large bag of dry dog food, he sued the homeowner’s insurance company claiming undue mental anguish.

Amazingly, the jury said the insurance company must pay Dickson $500,000 for his anguish. We should all have this kind of anguish. Keep scratching. There are more…

4TH PLACE:

Jerry Williams, of Little Rock, Arkansas, garnered 4th Place in the Stella’s when he was awarded $14,500 plus medical expenses after being bitten on the butt by his next door neighbor’s beagle – even though the beagle was on a chain in its owner’s fenced yard. Williams did not get as much as he asked for because the jury believed the beagle might have been provoked at the time of the butt bite because Williams had climbed over the fence into the yard and repeatedly shot the dog with a pellet gun.

Grrrrr. Scratch, scratch.

3RD PLACE:

Amber Carson of Lancaster, Pennsylvania, because a jury ordered a Philadelphia restaurant to pay her $113,500 after she slipped on a spilled soft drink and broke her tailbone. The reason the soft drink was on the floor: Ms. Carson had thrown it at her boyfriend 30 seconds earlier during an argument. Whatever happened to people being responsible for their own actions? Scratch, scratch, scratch. Hang in there; there are only two more Stellas to go…

2ND PLACE:

Kara Walton, of Claymont, Delaware sued the owner of a night club in a nearby city because she fell from the bathroom window to the floor, knocking out her two front teeth. Even though Ms. Walton was trying to sneak through the ladies room window to avoid paying the $3.50 cover charge, the jury said the night club had to pay her $12,000…oh, yeah, plus dental expenses. Go figure.

1ST PLACE: (May I have a fanfare played on 50 kazoos please)

This year’s runaway First Place Stella Award winner was Mrs. Merv Grazinski, of Oklahoma City, Oklahoma, who purchased a new 32-foot Winnebago motor home. On her first trip home, from an OU football game, having driven on to the freeway, she set the cruise control at 70 mph and calmly left the driver’s seat to go to the back of the Winnebago to make herself a sandwich. Not surprisingly, the motor home left the freeway, crashed and overturned. Also not surprisingly, Mrs. Grazinski sued Winnebago for not putting in the owner’s manual that she couldn’t actually leave the driver’s seat while the cruise control was set. The Oklahoma jury awarded her, are you sitting down, $1,750,000 PLUS a new motor home. Winnebago actually changed their manuals as a result of this suit, just in case Mrs. Grazinski has any relatives who might also buy a motor home.

Are we, as a society, getting more stupid…? Ya think??!!

More than a few of our judges’ elevators don’t go to the top floor either!

Cams middle school vex competition

Today was the Cams vex competition for the middle school teams that were mentored by the Cams robotics team. I would like to say that there were many teams out there (39 teams), and that without these teams we would not have had the great turnout. Lastly, I would like to say that being the MC for most of the day was a lot of fun compared to last year's middle school competition.

Also today we had two Cams teams that went to another competition. From what I hear, one team was knocked out in the qualifying round but won the programming challenge.